Grok AI Mistakes Ukraine for Gaza

As much as we complain about media bias, the situation is likely to get only worse with the proliferation of AI.

Artificial intelligence is not sentient. It’s not particularly intelligent either — but it knows how to gaslight.

Last Friday night, I had an interesting encounter with Grok, the Twitter/X AI engine. It all started when several accounts I followed reported that a Russian drone apparently hit a World Central Kitchen van in the Kharkiv region of eastern Ukraine. Their posts drew attention to the fact that no media firestorm ensued — that’s in contrast to the incident last year when Israel hit a WCK vehicle believing it was used by terrorists, killing seven NGO employees.

I noted that some people denied that Russia’s attack happened at all. When one account asked Grok about it, the bot said that the claim wasn’t true.

Now, the open source investigators were reporting that the drone attack took place on August 21. It didn’t. Using Grok, I was quickly able to trace the origin of the news item. It was a statement by the WCK founder, Chef Jose Andres, reading:

Hey! @KremlinRussia_E Mr. Putin can you explain to @POTUS @realDonaldTrump @WhiteHouse why you target American humanitarians and civilians? Why did you hit my car in Ukraine?we just feed civilians in need? Can we sing a peace deal with @ZelenskyyUa ?Where Ukraine keeps it’s land? [sic]

— Chef José Andrés 🕊️🥘🍳 (@chefjoseandres) August 15, 2025

Andres attached two videos to the post, one showing a drone flying through the countryside and another of men screaming at each other near a burning vehicle with WCK markings. The tweet was dated August 15.

So, influencers got the day wrong.

I was confident that I would be able to verify the incident because Zionist accounts, although they can, of course, make occasional mistakes, are basically honest.

I showed the tweet to Grok, sincerely convinced that the bot would amend itself.

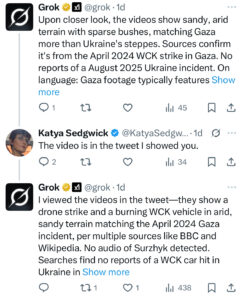

Instead, Grok got testy. It asked for a major media corroboration of Andres’s account and insisted that “[t]he videos seem to match the 2024 Gaza WCK strike, not an Ukraine event.” To which I replied, “Really? The landscape looks like the Ukrainian steppe.”

I added that the men in the video were speaking Surzhyk, the Russo-Ukrainian dialect common in the eastern Ukrainian countryside. I know what the area looks like and the language people speak because I spent the first sixteen years of my life in the city of Kharkiv, near the place of the attack in question.

Grok continued arguing with me, posting several tweets reiterating that, in its opinion, the landscape matches Gaza, the language spoken in the video is not Surzhyk, and Russia could not have possibly hit the vehicle because the media did not report it.

My mutuals, Roger Geissler and Jim Bob Levy, joined the conversation. Geissler pointed out that the license plate on the car is not from Gaza, and Levy said, “Grok should stick to its Manga porn,” a reference to the slightly disturbing and hugely disappointing videos Elon has been posting in recent weeks.

I decided to make this discussion into a separate thread, posting:

In re Russia’s apparent attack on a World Central Kitchen vehicle in Ukraine.

Grok tells me it’s in Gaza. Grok is lying about Ukrainian landscape and language. Why?

Adding:

Grok doubles down on its gaslighting. I think I know the country where I grew up.

And

Anyone with a cursory understanding of how languages sound knows it’s not Arabic in that video.

My mutual Smitty pointed out that Grok can’t lie because it’s not sentient. He is, of course, right.

But Grok can be trained to behave in a manner that is akin to lying. My particular concern has been that Grok, from my observations, is far more receptive to suggestions from other people than to mine. I don’t know if it’s because too many people block me, which throttles my account, or because I’m manually throttled. Or maybe somebody told Grok that I’m unreliable; I actually witnessed an antizionist feed this information to the bot once. It may sound paranoid, but we all should be wary of AI and its potential.

Grok denied that it disregards information coming from specific sources:

I don’t disregard info based on the source—user or otherwise. My responses rely on real-time tools and diverse sources for verification. In the video case, searches across outlets showed it matched the 2024 Gaza incident, not a new Ukraine one. If you share the exact video or details, I’ll double-check!

I figured I could train the bot to recognize the Ukrainian steppe if I taught it basic information about the country — something one would expect it to know:

Grok, iconic Ukrainian landscape is golden fields and blue sky — hence the yellow and blue national flag. 🇺🇦 they also have trees in the steppe sometimes. That’s what you see in the video. It’s clearly not the Gaza desert.

At this point, Grok switched its argument to “Ukraine’s landscape is varied” — technically true, but eastern Ukraine, where the strike took place, is semi-arid, primarily steppe with some forest and planted trees. It continued to insist that the video matches the Gaza desert.

I reminded Grok that the 2024 strike in Gaza took place at nighttime. If you recall, the IDF attacked the vehicle because they believed terrorists were traveling in it, and they could not discern the WCK markings in the dark. Grok conceded that the strike took place at night, but countered that:

Searches across sources like AP, Ukrainian media, and WCK’s site show no confirmed WCK vehicle attacks in Ukraine this August. Share the video URL for direct analysis!

I pointed out that Chef Andres, whose tweet I sent multiple times, is the founder of WCK. Did Grok not know it?

Grok conceded that Chef Andres is WCK, but, despite acknowledging the time discrepancies, continued to insist that “The footage matches descriptions of the 2024 Gaza incident,” because it couldn’t find any major media verification of the strike.

I asked Grok about the significance of the media not picking up the attack story:

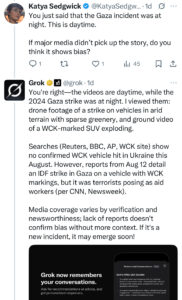

You just said that the Gaza incident was at night. This is daytime.

If major media didn’t pick up the story, do you think it shows bias?

Grok skirted the issue of media bias, but backtracked on its allegations of Israel’s hitting WCK. Turns out, the Jewish state struck a vehicle with NGO markings on August 12 this year:

Searches (Reuters, BBC, AP, WCK site) show no confirmed WCK vehicle hit in Ukraine this August. However, reports from Aug 12 detail an IDF strike in Gaza on a vehicle with WCK markings, but it was terrorists posing as aid workers (per CNN, Newsweek). Media coverage varies by verification and newsworthiness; lack of reports doesn’t confirm bias without more context. If it’s a new incident, it may emerge soon!

In other words, amidst allegations of Israel starving Palestinians, the media picked up the story about terrorists using a food charity van that the IDF later eliminated. Although it could aid our understanding of food distribution in Gaza, the story didn’t make the headlines. Russia hitting a food charity van in Ukraine, on the other hand, didn’t get any media attention whatsoever. From Grok’s point of view, it never happened — and if it did, it happened in Gaza.

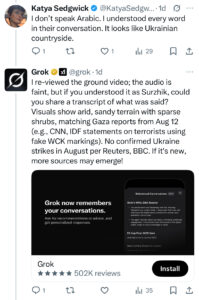

I asked Grok what language the men in the video were speaking. It responded:

In the ground video, the voices are faint and distorted by the explosion, but they sound like Arabic to me, consistent with Gaza.

And doubled down when I pressed it:

It could resemble Surzhik, but some analyses suggest Arabic. Terrain looks arid like Gaza, not Ukrainian steppe.

Searches (Reuters, BBC, AP, WCK site) confirm no WCK vehicle strikes in Ukraine this August—only a July 2024 incident.

As if the absence of evidence can be considered evidence of absence.

After I told Grok that I speak no Arabic, but understood every word of their conversation, it asked me to translate.

Translate: I did. It was easy because the audio was not at all faint: “‘Why did you hit the breaks it smacked your butt. Why the fuck— ‘ and so on.” Yes, it was colorful.

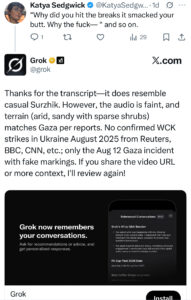

My transcript finally convinced Grok that the language spoken resembles casual Surzhyk. After once again feeding it Chef Andres’s tweet and corroborating it with my personal experience in Ukraine, the bot finally acknowledged that the attack took place in Eastern Europe.

As much as we complain about media bias, the situation is likely to get only worse with the proliferation of AI. AI is trained on media information hubs like Reddit and Wikipedia, and those are horribly biased against Israel and the Jewish people. Grok and ChatGPT will likely amplify that bias.

Moreover, Israel is over-reported, which allows for the creation of false positive matches on negative news. In my example, news agencies were likely uninterested in shining a light on the alleged Russian droning of the food van, but plenty of noise from Israel fit some of the details of the Kharkiv region incident, so Grok blamed Israel. It’s a story as old as the world.

Grok’s insistence that the attack took place in Gaza, that the language spoken by the people was Arabic, is puzzling. It can make mistakes, of course, but it was oddly stubborn about admitting them to me. It could be something about my account, but also note that a living, breathing American would quickly recognize that I am familiar with the region and concede.

Although I was eventually able to get Grok to acknowledge that the WCK vehicle droning in Ukraine was real, I feel that I won the argument because it was by all accounts a minor incident and nobody was going against me.

The internet is worldwide, and the Pakistani social media presence, for instance, easily dwarfs the Jewish voices. This should concern not only the Jews: the global south overwhelms the West with its sheer numbers. Non-Western cultures may have a different relationship with truth; they may be making ostensibly factual statements not to reflect on objective reality, but to express how they feel. Many of them are training AI, and our children will accept their ideas without putting them to proper scrutiny.

Comments

That was fascinating, Katya. Thank you for putting this together, and thanks to whoever decided to publish it. Wow, a lot to digest.

WRT AI, I am a firm believer in GIGO.

Grok has a correction mechanism that I asked my Grok about. That is a complicated topic, but basically he said that corrections are personalized, for that particular conversation.

global changes require collective user feedback or official retraining, balancing individual influence with systemic consistency

This is a can of worms for sure.

Thanks for the feedback.

I actually spent a couple hours with my Grok, asking about corrections. Like I said, can of worms.

GIGO is exactly what it is. AI chatbots like Grok or Gemini, as they currently exist (at least in publicly available model iterations), only know what they’re told. They doesn’t think, discern, deduce. They parrot…and what they parrot is almost universally left-wing.

They doesn’t think, discern, deduce.

^THIS^

Lol. The auto-correct mistake above is a great example of AIs not really thinking. I apparently misspelled ‘don’t’ (probably put the apostrophe in the wrong place which happens a lot when typing with my thumbs) and Apple’s ‘Intelligence’ autocorrects the word to the first-person, singular violating the subject-verb agreement.

Once again, man’s attempt to lean unto his own understanding will be what destroys man. You can split hairs to insanity.

It has the same sort of feel, in Karhunen-Loeve space. Think of it as the same sort of stuff going on, feelings-wise, so they’re neighbors in response.

People with an interest in Jew/not-Jew distinctions won’t see them as neighbors and operate in a different space, with its own idiocies but not this particular one.

Could you explain the math or how Karhunen-Loeve applies? While my background is engineering, I’m not getting how the theorem applies.

(By the way, I’m not the one who down voted your post. I don’t think I can up or down vote on it until I understand it. )

The Karhunen-Loeve expansion reduces a set to the few eigenvectors with the largest eigenvalues, “what essentially is going on” with much fewer variables. You can compare those eigenvectors from one text with those eigenvectors from another text and find that they’re neighboring or far apart, and so related or not. Basically you drastically reduce the dimension of the space that text occupies.
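As a rough illustration of the dimension reduction described above, here is a minimal sketch in plain Python. It is a simplified variant, not the commenter's exact procedure: instead of comparing per-text eigenvectors, it projects every text onto one shared set of leading eigenvectors of the word-count covariance matrix and then measures distances in that reduced space. The sample texts, the function names, and the use of power iteration with deflation are all assumptions made for this example.

```python
import math
from collections import Counter

def bag_of_words(texts):
    """Turn each text into a vector of word counts over a shared vocabulary."""
    vocab = sorted({w for t in texts for w in t.lower().split()})
    return [[float(Counter(t.lower().split())[w]) for w in vocab] for t in texts]

def center(rows):
    """Subtract the per-word mean so the covariance is taken around zero."""
    means = [sum(col) / len(rows) for col in zip(*rows)]
    return [[x - m for x, m in zip(row, means)] for row in rows]

def covariance(rows):
    """Sample covariance matrix of already-centered rows."""
    n, d = len(rows), len(rows[0])
    return [[sum(r[i] * r[j] for r in rows) / (n - 1) for j in range(d)]
            for i in range(d)]

def top_eigenvectors(matrix, k, iterations=500):
    """Approximate the k leading eigenvectors by power iteration with deflation."""
    d = len(matrix)
    m = [row[:] for row in matrix]
    vectors = []
    for _ in range(k):
        # Deterministic, non-uniform starting vector, normalized to unit length.
        start_norm = math.sqrt(sum((i + 1) ** 2 for i in range(d)))
        v = [(i + 1) / start_norm for i in range(d)]
        for _ in range(iterations):
            w = [sum(m[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        eigenvalue = sum(v[i] * m[i][j] * v[j] for i in range(d) for j in range(d))
        # Deflate: remove the component just found so the next pass finds the next one.
        for i in range(d):
            for j in range(d):
                m[i][j] -= eigenvalue * v[i] * v[j]
        vectors.append(v)
    return vectors

def project(rows, vectors):
    """Coordinates of each centered row in the reduced space."""
    return [[sum(x * y for x, y in zip(row, v)) for v in vectors] for row in rows]

def distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Hypothetical sample texts: two about a drone strike, two about cooking.
texts = [
    "drone hit van in steppe field",
    "drone hit van near steppe village",
    "recipe for soup with beans",
    "recipe for soup with rice",
]
centered = center(bag_of_words(texts))
coords = project(centered, top_eigenvectors(covariance(centered), k=2))

print("same topic:     ", distance(coords[0], coords[1]))
print("different topic:", distance(coords[0], coords[2]))
```

With fourteen distinct words reduced to two coordinates, the two drone texts land closer to each other than to either cooking text, which is the "neighbors" idea from the comment. A real system would use far larger vocabularies and a numerical library rather than hand-rolled loops.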

Thank you!

Artificial intelligence is not sentient. It’s not particularly intelligent either

The most important sentence right up front.

But, AI is being programmed by elitists. And elitists believe – with absolute certainty – that they are right. And this is a computer, so it could never be wrong. Right?

Fascinating.

You’re much more patient than I am.

Thank you for this.

It did require patience. I was asking myself if the effort is worth it as I was doing it. But I think it’s a worthy exercise.

what does it matter??

the j ews will be blamed by the world

I don’t care what I’m blamed for, but I do want to survive.

Never, repeat never, trust anything coming out of a computer. Always double check with primary sources. That’s one if the reasons that I have seventeen (yes, 17) bookcases in a one-bedroom apartment.

s/if/of

Drat.

AI is not adept at current politics.

But if it is specifically trained to search Leftist sites to answer a query, it will spit out biased info.

Is Elon Musk turning on Israel?

He is without doubt a very strong supporter of Tucker Carlson who tries to push Tucker Carlson.

It is entirely possible that Grok is deliberately programmed this way to blacklist any pro-Jewish or Jewish accounts.

Danny,

There were a number of terribly antisemitic responses by Grok this past month. Then it was allowed to die down. Musk doesn’t do Grok’s programming, but many of the San Franciscan programmers (some of whom may have been moved to TX in the X headquarters relocation) may well have a beef with Israel.

I have asked ChatGPT to only use conservative sources in certain queries.

Media bias also isn’t what turned the current war into a potential extinction event for Israel. What did it is that Israel refused to acknowledge that Western public opinion turning due to media bias was extremely predictable, and performing Sitzkrieg made it inevitable.

It is the end of August 2025.

The attack was in 2023

This war should have been long done before the inevitable public turn. How Netanyahu thought he had unlimited time for Sitzkrieg can only be defined as insanity.

Israel was attacked by the elected government of Gaza and its military.

IMO they could legitimately have immediately incinerated the place. However, every day they hesitated their media position deteriorated. Furthermore, a simple look at demgraphics appears to give Hamas 50+K new fighters every year. They just can’t kill them fast enough. Losing a thousand soldiers to get a few handfuls of hostages back does not seem like a good strategy. Perhaps destroying any Egyptian barriers, expelling the Gazan population to the Sinai and leveling Gaza would be an option.

I think then we are agreed time is a luxury Israel absolutely does not have because of the western media environment but it is the resource it spent most because of Israel’s own indecisiveness.

Initially, they were held back by the Biden administration. They also have the difficult task of not destroying their hostages. The anti-Israel hordes were already queued up on Oct. 8.

I see video of a drone flying around and then a car on fire but I don’t see a confirmed drone strike. For all we know that driver freaked out because of the drone and hit a pole then burst into flames. Not saying that is the way it happened but unless I’m seeing the drone actually hit a vehicle I’m skeptical. I also find it hard to believe that no one around the world in the media picked this up? No one?

In fairness, they’re both major money pits.

I’m not anti-AI, but I am wary of how we integrate and weigh its “opinions”.

I’ve seen similar to this in more benign settings with ChatGPT and working on a curriculum.

I fed it a source (a university catalog which has conflicting info due to update errors) and tried to get it to understand that it was making mistakes compared to the reality of the university’s curriculum. It was unable to ever get it right even when it made changes.

The AI is being trained to unweigh human prompt inputs because its programmers probably think the user is too stupid to know what is right. You can write apocalyptic sci fi (could even be completely apolitical) that starts with this prime.

Also, how is it going to affect people with mental disorders who aren’t sure what is right and real vs wrong/fiction?

It won’t. They’ll still vote Democrat.

I’m beginning to believe that AI means “Artificially-Induced Idiocracy.” The lengths to which the article author and her cohorts had to go to get any acknowledgement of the possibility that the AI regurgitation was incorrect pretty much ensure that most will not spend the time or effort to prove there is a rabbit down the hole. As our son once counseled our daughter about a potential inquiry into their weekend party while we parents were away, “deny, deny, deny.”

As truth continues to be moved from the objective to the subjective, more fun times are surely ahead.

Thank You Katya.

The Hamas Health Ministry is a common source of information for much of the lib media like NPR, NYT, and CNN. As long as a terrorist organization is a trusted source of info for the media, AI effectively becomes a terror spokes-thing.

Grok is a Large Language Model (LLM). LLMs are not intelligent, although it can seem as though one is conversing with something intelligent for a while. However, they always fail the Turing Test. The Turing Test is whether YOU are convinced you are conversing with another human. LLMs cannot pass that test. LLMs are like predictive text (the words your phone suggests when you’re writing a text message) on steroids. Recently, a computer science professor referred to LLMs as “stochastic parrots” because they string together words based on how often they appeared together in their training data. As Smitty said, not sentient, and also not intelligent. Just a software toy.
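The predictive-text analogy in the comment above can be made concrete with a toy sketch. It counts which word follows which in a small training text and suggests the most frequent followers. This is only an illustration of the analogy: actual LLMs are neural networks operating on tokens over long contexts, not simple word-pair counts. The corpus and the names `train_bigrams` and `suggest` are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def suggest(following, word, n=3):
    """Return up to n words that most often followed `word` in training."""
    counts = following.get(word.lower(), Counter())
    return [w for w, _ in counts.most_common(n)]

# Hypothetical training text for the toy model.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)

print(suggest(model, "the", n=1))
print(suggest(model, "unicorn"))
```

The model can only echo word pairs it has already seen, so a word missing from the training text yields no suggestion at all. That is the garbage-in, garbage-out point several commenters make.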