VIDEO: Discrimination By Algorithm – the “ultimate showdown between equity and equality is going to take place at a technological level”

Webinar on the pressures to use technology to achieve “equitable” group results. Supposed “bias free” algorithms and AI will, in fact, be stealth quotas hidden out of sight: “the showdown between equity and equality through algorithms, machine learning, and artificial intelligence will not be televised.”

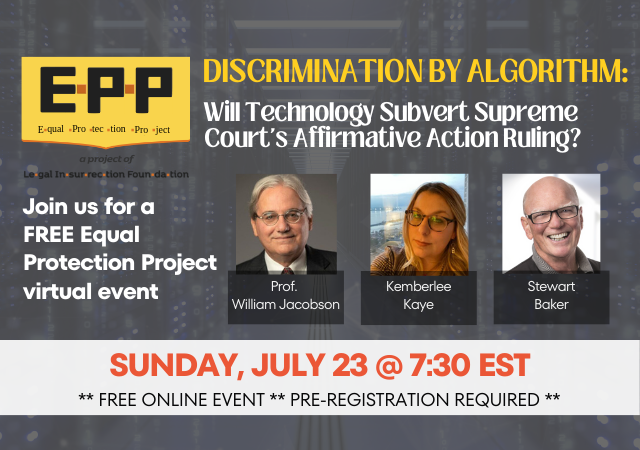

Discrimination by algorithm and Artificial Intelligence to achieve stealth quotas was the subject of an online webinar sponsored by the Equal Protection Project on Sunday, July 23, 2023.

The webinar explored how technology already is being used, and will be used, to achieve stealth quotas, as part of a broader “equity” group-results agenda and an effort to evade the Supreme Court’s ruling against affirmative action. Building “equity” into algorithms and Artificial Intelligence in a variety of information decisions (employment, financial, and others), on the premise that anything other than proportional group representation is bias, is a major Biden administration objective. Supposed “bias free” algorithms will, in fact, be biased in favor of quotas. Proposed bipartisan legislation supported by Republicans may make matters worse.

The full video and a partial transcript are below.

Background

You may have learned about the use of algorithms to manipulate pools of job applicants through our exposé about LinkedIn’s ‘Diversity in Recruiting’ function. But it’s not limited to LinkedIn. Already there is tremendous pressure in many places to manipulate algorithms and Artificial Intelligence to achieve predetermined racial, ethnic, and sex quotas. This pernicious discrimination is unseen and generally unknown to job seekers, and even in many ways to recruiters/employers. These stealth quotas will be part of the efforts to evade the Supreme Court’s ruling striking down race-based affirmative action. What once was done in plain sight will be done out of sight. You have been warned.

About the speakers:

Stewart Baker is Of Counsel to Steptoe & Johnson LLP in Washington, D.C. His career has spanned national security and law. He served as General Counsel of the National Security Agency, Assistant Secretary for Policy at the Department of Homeland Security, and drafter of a report reforming the intelligence community after the Iraq War. His legal practice focuses on cyber security, CFIUS, export controls, government procurement, and immigration and regulation of international travel. He is the author of Stealth Quotas – The Dangerous Cure for ‘AI Bias’.

William Jacobson is the founder of Legal Insurrection Foundation, which was created in 2019, and the Legal Insurrection blog, which he launched in 2008. He is a Clinical Professor of Law and Director of the Securities Law Clinic at Cornell Law School. He is a 1981 graduate of Hamilton College and a 1984 graduate of Harvard Law School. Prior to joining the Cornell law faculty in 2007, Professor Jacobson practiced law in New York City (1985-1993) and Providence, Rhode Island (1994-2006). A more complete listing of Professor Jacobson’s professional background is available at the Cornell Law School website.

Kemberlee Kaye is the Operations and Editorial Director for the Legal Insurrection Foundation. She is a graduate of Texas A&M University and has a background working in immigration law, and as a grassroots organizer, digital media strategist, campaign lackey, and muckraker. Over the years Kemberlee has worked with FreedomWorks, Americans for Prosperity, James O’Keefe’s Project Veritas, and Senate re-election campaigns, among others. Kemberlee is the Senior Contributing Editor of the Legal Insurrection website, where she has worked since 2014. She also serves as the Managing Editor for CriticalRace.org, a research project of the Legal Insurrection Foundation.

The full video is embedded at the bottom of the page.

PARTIAL TRANSCRIPT

Here are some short excerpts from the event, selected by each of us (with time stamp where it appears in the video):

WILLIAM JACOBSON

(00:02:46): The topic tonight is, at one level, not new. At another level, it’s new. It’s the fight we’ve been having and was recently resolved by the Supreme Court over equality versus equity. Equal protection versus equity, which is not equal protection, which is treating people differently based on race and other characteristics.

(00:10:30): So this has the possibility of really manipulating the system from every direction. And if you see, and I, I think Stewart might get into this more, but if you see what the current administration, the Biden administration has been putting out, they have various initiatives for equity in algorithms and equity in artificial intelligence. And so what they’re saying is that they want to see not the process. They want to see, not that the process treated an individual fairly, they want group results. They want exactly what diversity, equity, and inclusion is on your typical university campus. They want quotas basically. And that can’t be achieved above the surface anymore because the Supreme Court has said it’s unlawful, and that’s resolved. But it will take place behind the scenes.

(00:11:59): So I think in many ways, a lot of the fights we’ve been having, while extremely, extremely important, are really just the prelude to the ultimate showdown between equity and equality, which is going to take place at a technological level. The showdown between equity and equality through algorithms, machine learning, and artificial intelligence will not be televised, so to speak. And it’s something that I think we need to address.

(00:51:10): I think there’s also an opportunity for various states to do with this problem, what they have done with regard to critical race theory and diversity, equity and inclusion, to use their market power to say you can do in Illinois whatever you want. We can’t stop you, but you’re not going to do it in Florida or, you know, uh, Idaho or Texas. Um, and, and companies will respond to that. I think that if you were to require some senior official of the appropriate company, and I’m not sure if it’s the company that produced the algorithm or whatever it is that, you know, to sign under penalty of perjury Yeah. That this product has not used race and other factors to alter the results of its program. I think, I’m not saying it’s gonna stop this in its tracks, it’s not, but that would go a long way.

STEWART BAKER

(00:14:16): Artificial intelligence — it’s immensely accurate. It’s far more accurate than any individual human could be. That is its power. And that is why artificial intelligence is being used more and more for decisions that were previously made by bureaucracies. Sooner or later, it’s going to learn to think like the experts and then to begin to find answers that even the experts don’t see. So that is why it’s immensely powerful and why we’re going to see it everywhere. But the first problem with that is that machine learning and artificial intelligence is famously unable to tell you how it arrived at the answers it has arrived at.

(00:17:30): People who worry about bias, who worry about equity in particular, have said, “I don’t really trust these machines to make unbiased decisions.” And … all they can do is look at the outcomes. And so we very quickly descend into a world where people who believe in equity say, “If this has a disproportionate impact on protected groups, then it must be biased.”

(00:18:40): As a practical matter, the academic literature defaults to group justice and to equity — to saying, “Any algorithm that produces results that are not roughly proportionate to the representation of protected groups in the overall population is a biased algorithm.”
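As an editorial illustration (not from the webinar), the outcome-based test described in that excerpt can be sketched in a few lines of Python. This is only a rough sketch under stated assumptions: the function names, the group labels "A" and "B", the sample data, and the 0.2 tolerance are all hypothetical and are not drawn from any statute, paper, or product.

```python
from collections import Counter


def representation_ratios(selected_groups, population_shares):
    """Share of each group among those selected, divided by its population share.

    A ratio of 1.0 means the group is selected exactly in proportion.
    """
    total = len(selected_groups)
    if total == 0:
        raise ValueError("no selections to evaluate")
    counts = Counter(selected_groups)
    return {
        group: (counts.get(group, 0) / total) / share
        for group, share in population_shares.items()
    }


def is_roughly_proportionate(selected_groups, population_shares, tolerance=0.2):
    """True if every ratio is within `tolerance` of 1.0.

    The tolerance is an assumed cutoff chosen only for illustration.
    """
    ratios = representation_ratios(selected_groups, population_shares)
    return all(abs(ratio - 1.0) <= tolerance for ratio in ratios.values())


# Hypothetical example: two equally sized groups, ten selections.
population_shares = {"A": 0.5, "B": 0.5}
selected = ["A"] * 7 + ["B"] * 3

ratios = representation_ratios(selected, population_shares)
flagged = not is_roughly_proportionate(selected, population_shares)
```

Note that a check of this kind looks only at who was selected. It takes no account of how or why each individual was chosen, which is the distinction between outcomes and process that the speakers draw.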

(00:22:06): “If it doesn’t produce group justice, we’re going to tell the machine it got it wrong: Even though it picked a candidate who was more likely to succeed, it did not pick a candidate who was more likely to contribute to equity and diversity in the workforce. That’s a wrong answer. And we’ll just keep doing that until we get a set of recommendations that fits our notion of what’s a proper proportionate representation of protected groups.” [But] you’ll never see that because these machines don’t explain themselves after they’ve been trained, they don’t say, “I was told I couldn’t do that.” They just say, “Here are the very best candidates, and they will be the very best candidates within the constraint.”

(00:24:18): What you’re seeing across the country is individual states, individual cities, and ethical standards being promulgated that say, “Above all, your artificial intelligence algorithm must not be biased. You need to get a special group of professionals to determine whether it’s biased. And those professionals all have to be representative of all kinds of groups and, usually, believers in equity. And they will evaluate this [bias], they will fix [it], and then you will have to tell us that you are not using a biased algorithm to make your decisions.” …. I would say that in the area of artificial intelligence, …, preventing bias… in artificial intelligence is almost always going to be code for imposing stealth quotas.

(00:26:37): The House Commerce Committee is producing a bill that almost every Republican on that committee has supported. Mostly it’s about privacy. It has one section on artificial intelligence and bias in which it says, “You may not process private information [with AI] if it produces harm. And harm is expressly defined as [having] a disparate impact on the basis of an individual’s race, color, religion, national origin, sex or disability.” Disparate impact is code for “not proportional to their representation in the population.” …. This is a Republican-supported bill that is saying you may not use algorithms unless they produce stealth quotas.

(00:46:39): Corporate America has consistently supported quotas, because it guarantees them that they’re not going to be sued. I’m sure that one of the explanations for how a Republican committee could enthusiastically embrace this is that the Fortune 500 companies they were talking to said, “Oh yeah, this would be great.”

(00:48:22): The same phenomenon that produced pressure to build quotas in so that [companies] could claim that they were bias free can be brought to bear on the practice of building quotas in. States like Florida and Texas can start saying, “Hey, if you’re going to sell us artificial intelligence, we want you to certify that at no stage in the development of this artificial intelligence algorithm, were protected classifications used to adjust the outcome.”

VIDEO

Comments

Think of the possibilities. You can now discriminate, lie to people, foster infinite amounts of propaganda, even control election results and none of it is your fault. “The computer did it” is now the new “The dog ate my homework.” It’s perfect. No one knows who wrote the programming, who installed the programming, how the programming works, etc. It’s the ultimate “Not my fault” excuse for a believing public. (sarc)

No matter what measures are taken to ensure IQ tests are fair, the left argues that they are biased. I propose a proxy impervious to such an accusation. It has been known since the study of Victorian era reaction time tests that reaction time correlates with general intelligence. Administer reaction time tests to college and job applicants, and then stand by the results. The machine can have no bias and its one measurement is not susceptible to cultural influence. I await the screams.